Introduction: Azure Arc Operations

Getting the Azure Arc agent deployed to your servers is step one - the work begins afterward. Based on my experience managing 10,000+ Arc-enabled servers, I can tell you that post-deployment operations determine whether your Arc implementation succeeds or becomes a management problem.

Azure does many things for you during deployment, but it does not keep your agents alive and healthy in production. That's entirely on you.

If you only measure rolled-out agents, you're doing it wrong. Arc deployment success isn't about agent count - it's about functional integration across your entire ecosystem.


What You'll Actually Face

Even with perfect deployment automation, you'll encounter these situations:

- Less than 1% of machines may fail mysteriously during onboarding or extension deployment, and that's with good automation

- Monthly agent updates from Microsoft that you must deploy or face reliability issues

- Extension version drift causing inconsistent behavior across your fleet

- The 45-day cliff - disconnected agents expire and require complete re-onboarding

- Cross-system dependencies - Arc isn't isolated; it integrates with Defender XDR, Sentinel, backup systems, and monitoring solutions

- External service failures - Microsoft's own services can fail, requiring operational procedures that account for scenarios beyond your control

What This Article Covers

This is the fourth article in our Azure Arc series. If you haven't deployed Arc agents yet, start with our Azure Arc for Servers Implementation Guide, which covers architecture planning and deployment strategies. With deployment strategies covered there and data sources covered in our Azure Arc Data Sources article, this article focuses on the ongoing operational challenges. Future articles will cover agent installation methods and deployment automation.

1. Lifecycle Management - Complete agent lifecycle from onboarding to offboarding

Onboarding & Management Phase:

- Monthly agent update process documented

- Process documented for handling failed onboarding or operations

- Escalation path for Arc operation failures documented

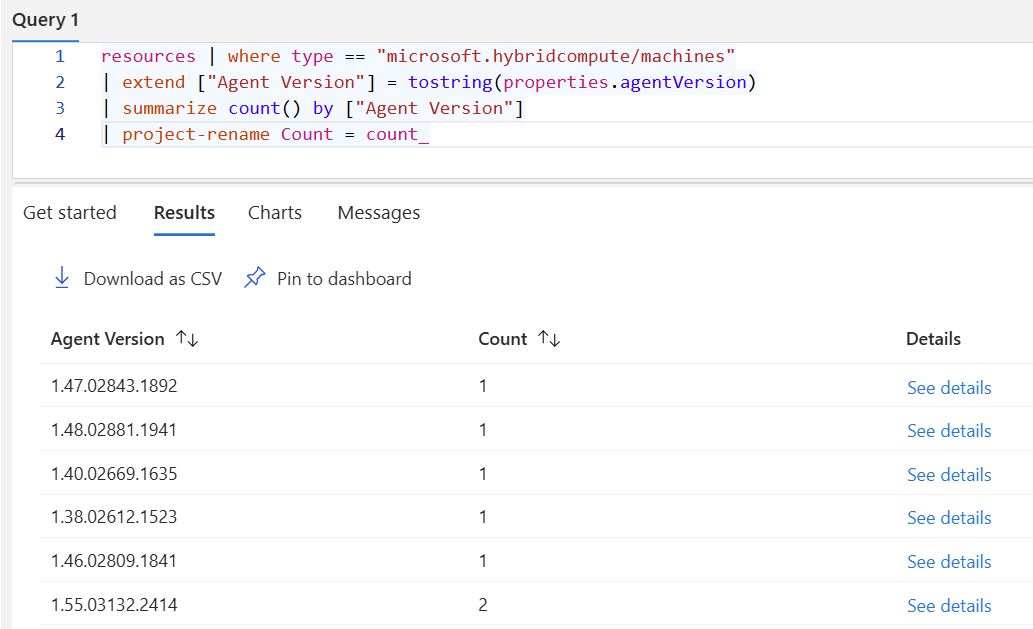

- Agent version tracking and remediation process documented

Offboarding Phase - The forgotten half of lifecycle management:

- Arc object cleanup process documented

- Cross-system offboarding automation implemented

- Connected services validation process documented (CMDB, AD, EDR/AV, Defender XDR)

- Server decommissioning workflow documented across entire ecosystem

- Orphaned object prevention process implemented

2. Security vs Operations: Making the Arc Tradeoff - Understanding monitoring mode vs. full mode and the operational implications of security decisions

- Security mode decision criteria documented

- Security mode validation process across fleet implemented

- Extension allowlist and security policy management documented

- Security mode compliance checking process documented

- Security exception handling and documentation process documented

3. Health Monitoring - Detecting issues before they cause other problems

- Cross-system data flow monitoring implemented

- Agent disconnection alerts configured and tested

- Investigation process documented for Arc/service health mismatches

- Response time targets documented for failure scenarios

- Activity log correlation process implemented

4. Automation Strategies - Building self-healing systems that handle the small failure rate

- Tag management automation and data accuracy documented

- Cross-reference validation between AD and Arc implemented

- Automation solution management process documented

- Automation failure handling process documented

- Automated process validation and monitoring implemented

5. Troubleshooting - Practical diagnostic and remediation guidance (coming in a future update)

These are sample checkpoints to help identify operational gaps - not a final checklist.

This guide is based on real-world experience with enterprise Arc deployments where "good enough" isn't acceptable.

1. Lifecycle Management

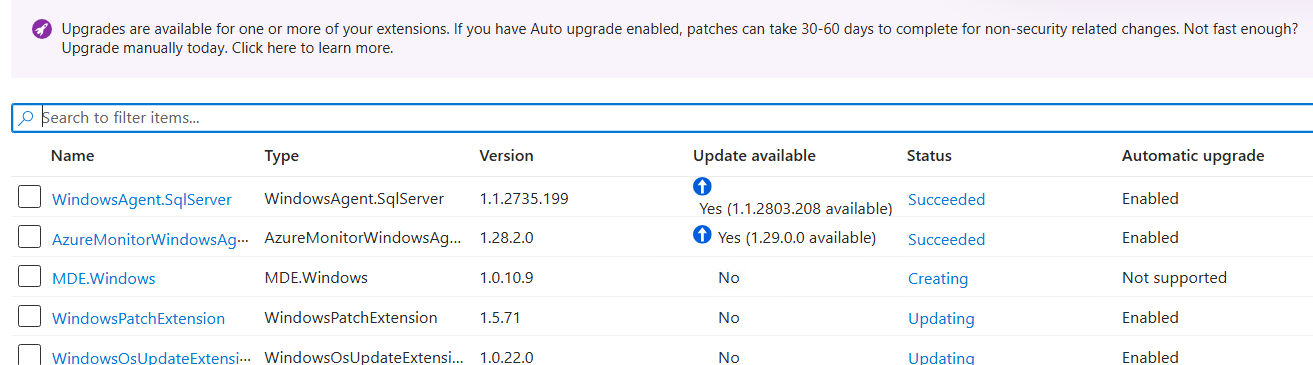

You deploy 1,000 Arc agents. Microsoft releases monthly updates. Some agents update automatically, some don't. Extensions get corrupted and stuck in permanent "Installing" state. You end up with 47 different agent versions across your fleet and 12 broken extensions that can't be uninstalled through any normal method.

This happens when teams ignore the small monthly failure rate across multiple layers - 10 agents fail updates this month, 8 extensions get stuck next month, 5 servers stop sending logs to Sentinel, 3 Defender enrollments break. Over 12 months of accumulated neglect, these small failures compound into operational chaos. It's not just agent updates - it's extensions, data flows, Defender enrollments, update installations, and policy applications all failing at small rates that add up when ignored.
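To make the compounding effect concrete, here is a rough back-of-the-envelope sketch. The counts are the ones from the example above; the assumptions that every count recurs each month, that failures hit different servers each time, and that nothing gets remediated are mine, purely for illustration.

```python
# Illustrative arithmetic only: assumes the example failure counts recur every
# month, hit different servers each time, and are never remediated.
MONTHLY_FAILURES = {
    "agent updates": 10,
    "stuck extensions": 8,
    "Sentinel log flow": 5,
    "Defender enrollment": 3,
}


def accumulated_failures(monthly: dict[str, int], months: int) -> int:
    """Total unresolved failures after `months` of neglect."""
    return sum(monthly.values()) * months


FLEET_SIZE = 1_000  # fleet size from the scenario above
backlog = accumulated_failures(MONTHLY_FAILURES, 12)
print(f"{backlog} unresolved issues, up to {backlog / FLEET_SIZE:.0%} of the fleet")
```

Under those assumptions, a per-layer failure rate of 1% or less per month still leaves roughly a third of a 1,000-server fleet with at least one open issue after a year.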

The biggest operational problem isn't deploying Arc agents - it's keeping them healthy, updated, and functional across thousands of servers month after month. That means handling monthly agent updates from Microsoft, managing extensions across different services, containing version drift that creates inconsistent behavior, and dealing with the inevitable failures that occur at scale.

When managing Arc agents at scale, the azcmagent command complexity becomes a daily problem. Every operation requires looking up syntax - version checks need azcmagent version --check, connectivity testing requires location parameters like azcmagent check --location "westeurope" --enable-pls-check, and configuration management involves separate commands for listing, getting, setting, and clearing different settings.

This gets complicated when you're doing bulk operations across hundreds of servers. You may need to help other people remotely. You want simple commands that work consistently, not cryptic parameter combinations you have to look up every time.

Microsoft provides the Az.ConnectedMachine PowerShell module with 35 commands for Arc operations like Connect-AzConnectedMachine and Get-AzConnectedMachine. However, this module focuses on Azure-side operations, not local agent management.

The command complexity led me to build the AzureArcConnectedAgentManagement PowerShell module - 15 commands that wrap the azcmagent complexity into something more usable. Commands like Get-AzureArcNodeAgentInformation replace having to memorize azcmagent show --output json --verbose. The module has over 7,000 downloads on PowerShell Gallery, which shows that other people face the same command complexity issues.

During one customer deployment, we immediately saw around 50-60 servers get stuck with extensions that simply wouldn't install properly. The portal state locks up, and you can't do anything from the portal or the API. Once extensions get into this stuck state, nothing can remove them:

- Extensions show "Updating" or "Installing" forever in Azure portal

- Can't remove through portal (operations timeout)

- Can't remove via PowerShell or CLI (fails silently or with errors)

- Portal state gets completely locked

- Restarting services doesn't fix the issue

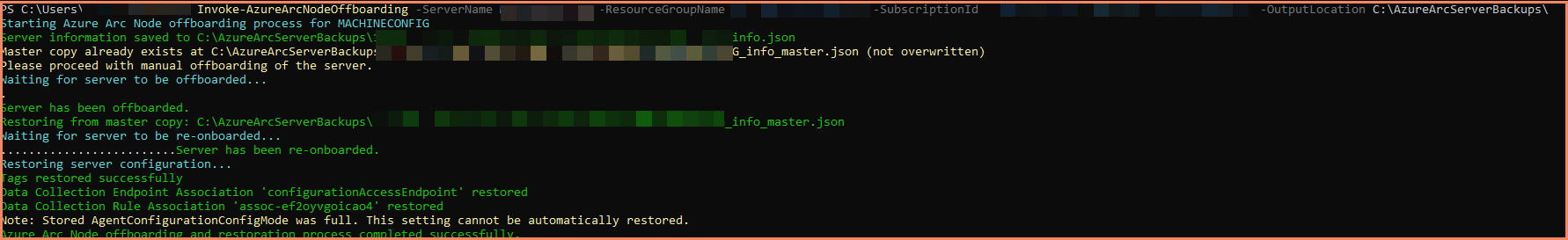

After encountering similar issues with another customer, I looked for a way to ease the re-onboarding pain. Microsoft doesn't offer a solution for this today, so I built the Azure Arc Re-Onboarding Assistant. This is the current version; there is definitely more to add to the tool, but for now it stays as it is.

The tool captures tags, data collection rule associations, and data collection endpoint associations to a JSON file. It creates two backup files: a master backup (created once) and a current backup (updated each run). The tool guides you through manual offboarding and then restores the configuration after re-onboarding.
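The master/current backup pattern is easy to picture in code. The following is a minimal Python sketch of the idea only, not the module's actual implementation (the module itself is PowerShell); the file naming and the shape of the `config` dictionary are assumptions made for illustration.

```python
import json
import shutil
from pathlib import Path


def save_backup(config: dict, backup_dir: Path, server: str) -> tuple[Path, Path]:
    """Write the current backup; create the master backup only on the first run."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    master = backup_dir / f"{server}.master.json"    # hypothetical file naming
    current = backup_dir / f"{server}.current.json"  # hypothetical file naming

    # The current backup is refreshed on every run.
    current.write_text(json.dumps(config, indent=2), encoding="utf-8")

    # The master backup is written once and never overwritten afterwards.
    if not master.exists():
        shutil.copyfile(current, master)
    return master, current
```

Keeping an untouched master copy means a bad run (for example, one that captures an already half-broken configuration) can never destroy the last known-good state.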

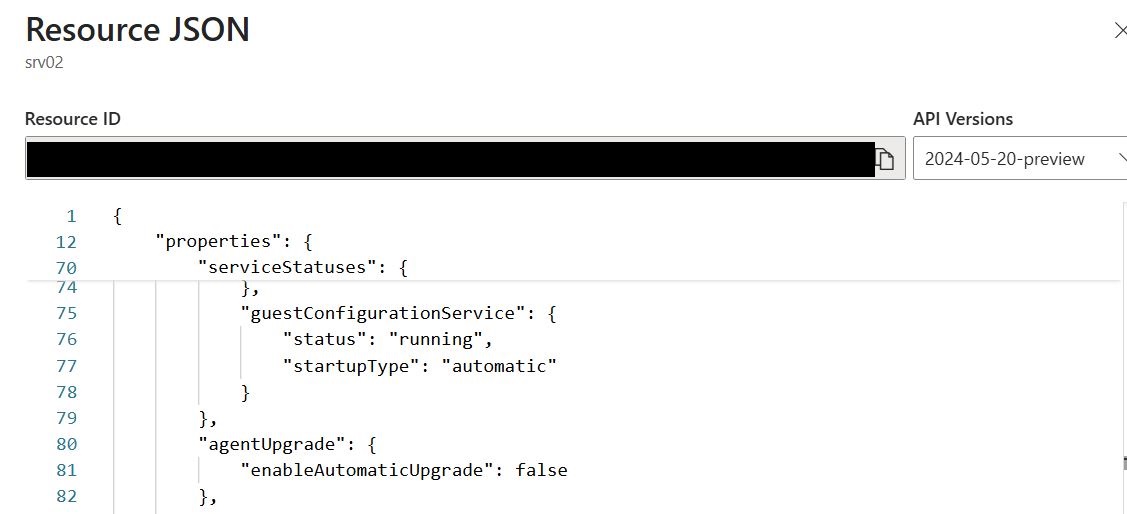

The tool is available as the AzureArcReOnboardingAssistant PowerShell module with commands like Invoke-AzureArcNodeOffboarding for full operations and restore-only capabilities using specific backup files. It also notes the AgentConfigurationConfigMode setting during backup.

For agent updates, Microsoft announced agent auto upgrade, a new way of keeping your agents updated that, for now, must be enabled through the API. I'm not yet sure whether turning it on is a good idea for all customer sizes and projects, but time will tell.

Looking back, Arc agent updates haven't caused any major issues on their own. An Arc agent update is just a standard update during Patch Tuesday - nothing complex. But if you don't use Azure Update Manager, make sure Arc agent updates are still applied correctly every month. My experience shows that customers who use other patching solutions have completely missed the agent updates. Old agents mean no new features, missing security updates, and more issues with extensions. Keep your agents updated.

To sum it up: track agent versions, keep them updated, and build proper in-house workbooks or check out the Arc workbook in our solution catalog.
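As a minimal sketch of what version tracking can look like once you have exported an inventory (for example from Azure Resource Graph), the snippet below counts agent versions and flags servers below a minimum. The inventory, the version strings, and the minimum version are made up for illustration.

```python
from collections import Counter


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.48.02881.1941' into (1, 48, 2881, 1941) for numeric comparison."""
    return tuple(int(part) for part in version.split("."))


def version_report(fleet: dict[str, str], minimum: str):
    """Return (version distribution, sorted servers below the minimum version)."""
    distribution = Counter(fleet.values())
    floor = parse_version(minimum)
    outdated = sorted(s for s, v in fleet.items() if parse_version(v) < floor)
    return distribution, outdated


# Made-up inventory: server name -> agent version
fleet = {
    "srv01": "1.48.02881.1941",
    "srv02": "1.39.02628.1197",
    "srv03": "1.48.02881.1941",
}
distribution, outdated = version_report(fleet, minimum="1.45")
print(f"{len(distribution)} distinct versions, outdated: {outdated}")
```

The number of distinct versions is a useful single metric for a workbook: if it keeps growing month over month, updates are being missed somewhere.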

Offboarding Phase (The One We Don't Want to Even Whisper)

People are excellent at spinning up new infrastructure but terrible at cleanup. When someone claims they're doing offboarding "manually," it's usually not happening consistently across all systems. What actually occurs during server decommissioning:

- Server gets decommissioned

- Arc object might get deleted (if remembered)

- Defender XDR device object stays orphaned (can't delete from portal anyway)

- CMDB entry remains stale

- AD computer object might be left behind

- Third-party EDR/AV still has the device record

- Monitoring systems still expect data from dead servers

The result: dead objects everywhere that eventually hit expired states, making it impossible to distinguish what's actually active infrastructure versus what's dead. This leads to different numbers in different systems - and there are many memes and posts about this exact problem because it's so common.

Arc isn't isolated - it's connected to multiple systems that each maintain their own device records:

Azure Side:

- Arc server object (can be deleted via API/portal)

- Associated extensions and configurations

- Policy assignments and compliance records

Identity and Management Systems:

- Active Directory computer objects

- CMDB entries and asset records

- Configuration management tool records

Security Systems:

- Defender XDR device objects

- Third-party EDR/AV platform records

- Security policy assignments

- Compliance and vulnerability scan records

Monitoring Systems:

- Log Analytics workspace expectations

- Azure Monitor alert rules

- Custom monitoring solutions

Manual coordination across all these systems doesn't scale and isn't reliable. You need automation that handles the entire ecosystem.

Automation-First Approach

The solution is Azure Automation + Hybrid Runbook Worker to extend automation capabilities beyond just Azure to other clouds and on-premises systems. This gives you end-to-end capabilities instead of being limited to Azure-side cleanup.

Hybrid Runbook Worker Benefits:

- Execute PowerShell scripts in your on-premises environment

- Access to internal systems (AD, CMDB, third-party APIs)

- Coordinate cleanup across multiple platforms

- Handle authentication to various systems

- Provide logging and error handling across the entire process

Example Automation Workflow:
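As a minimal sketch of how such a workflow could be structured (not an actual production runbook), the Python below runs one cleanup step per system and records failures instead of stopping at the first one. The step functions are hypothetical placeholders; real ones would call the Azure, Active Directory, CMDB, and EDR APIs from a Hybrid Runbook Worker.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("offboarding")


def offboard(server: str, steps: list[tuple[str, Callable[[str], None]]]) -> dict[str, str]:
    """Run every cleanup step; record failures instead of aborting the workflow."""
    results = {}
    for system, cleanup in steps:
        try:
            cleanup(server)
            results[system] = "ok"
            log.info("%s: removed %s", system, server)
        except Exception as exc:  # keep going so one system cannot block the rest
            results[system] = f"failed: {exc}"
            log.error("%s: cleanup of %s failed: %s", system, server, exc)
    return results


# Hypothetical placeholder steps, for illustration only.
def delete_arc_object(server: str) -> None: ...
def remove_ad_computer(server: str) -> None: ...
def retire_cmdb_entry(server: str) -> None:
    raise RuntimeError("CMDB API unreachable")  # simulated failure


steps = [("Arc", delete_arc_object), ("AD", remove_ad_computer), ("CMDB", retire_cmdb_entry)]
results = offboard("srv01", steps)
```

Collecting a per-system result makes partial cleanups visible, so a failed CMDB step can be retried later without leaving an orphaned record unnoticed.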

This approach ensures consistent offboarding across your entire infrastructure ecosystem, not just the Azure Arc object.

Practical Offboarding Note

You may wonder: if I need to decommission or shut down a server permanently, do I need to run azcmagent disconnect? The answer is no. If the server is offline, you can just delete the object from the Azure portal and you're good to go from Azure's perspective.

But as mentioned before, think about it from an end-to-end perspective. Use diagram tools to write down the process and map all connected systems, then write the code to handle the complete workflow. The Arc object deletion is just one step in a larger decommissioning process.

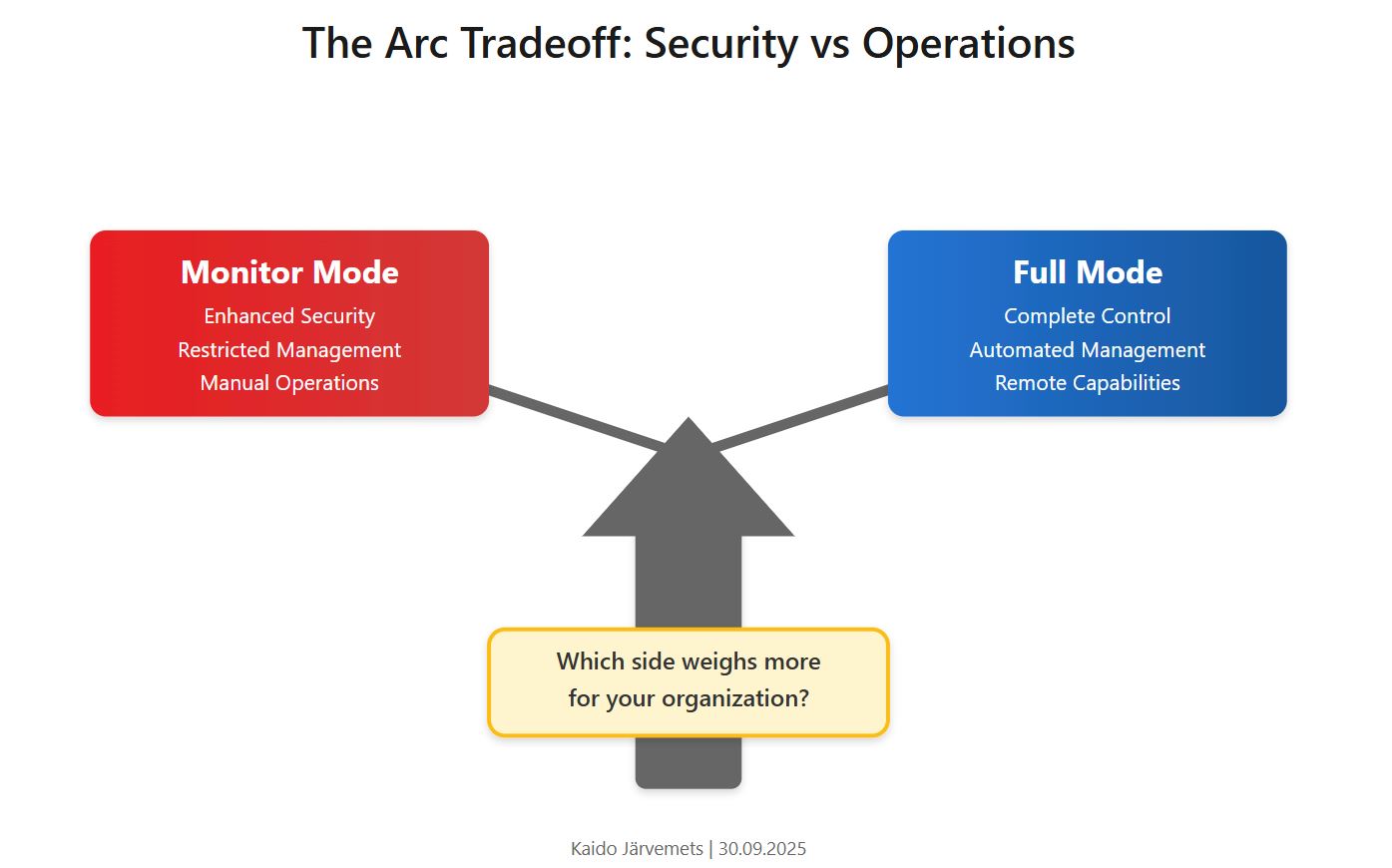

2. Security vs Operations: Making the Arc Tradeoff

Now that we've covered the basic agent management operations, let's talk about something more technical. If you've been following Azure Arc discussions on social media, you've probably seen the ongoing debate about security modes. Before you move forward with Arc deployment - or if you didn't fully understand how the Arc agent operates and what its capabilities actually are - you need to make this fundamental decision.

What does Arc actually mean for your organization? This isn't about technical capabilities - it's about operational reality.

Arc fundamentally changes your server management model. Instead of logging into servers, using RDP, or deploying agents manually, you now have centralized Azure-based management. This means your server operations team can deploy software, run scripts, collect logs, and configure systems from Azure portal or PowerShell - without ever touching the actual servers.

But here's where security architects often miss the point: If you disable Run Command, block extensions, and lock everything down in monitor mode, then HOW are you actually going to manage those servers? You're back to RDP, manual logins, and local management - exactly what Arc was supposed to solve.

The operational questions you must answer:

- If we block Run Command, how do we deploy emergency patches? Going back to RDP defeats the purpose of Arc

- If we use monitor mode, how do we install software remotely? Manual processes don't scale

- If we disable guest configuration, how do we enforce compliance? Alternative tooling costs more and creates complexity

- If we restrict extensions, how do we deploy monitoring agents? You still need visibility into systems

Many security architects create "secure" Arc configurations that are operationally useless. They disable the very capabilities that justify Arc's existence, then wonder why operational teams resist the technology.

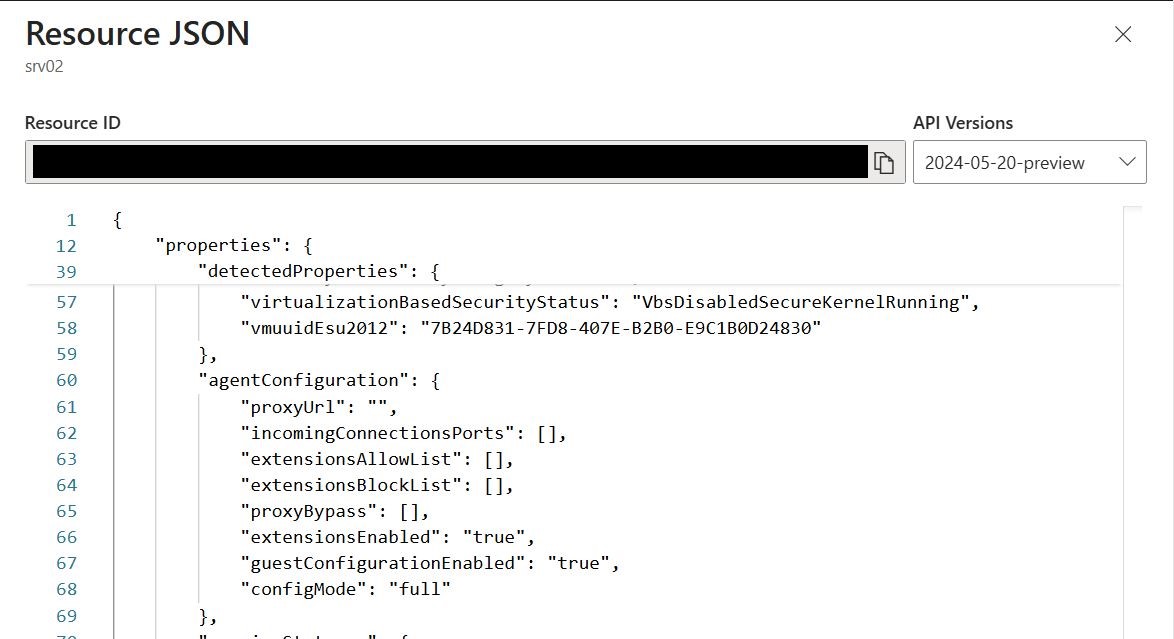

As covered in the Azure Arc for Servers Implementation Guide, the Arc agent comes in two modes, and full mode is the default. The mode you choose determines which management capabilities are available across your entire fleet. This isn't just a security decision - it's about what functionality you actually need versus what restrictions you can live with operationally.

Understanding the Two Modes

Full Mode (Default) enables all Azure Arc agent functionality - all extensions can be installed and managed, guest configuration policies work, Run Command is available, remote connectivity tools are enabled, and you have complete management capabilities. This is the out-of-the-box configuration that gives you maximum flexibility but also maximum attack surface.

Monitor Mode (Restricted) is designed for environments where you want monitoring capabilities but restricted management access. Only monitoring-related extensions are allowed (Defender for Endpoint, Azure Monitor Agent, Log Analytics, Dependency Agent, security monitoring agents, and Qualys vulnerability scanners). Guest configuration is disabled, Run Command is blocked by default, and remote management tools are restricted.

| Capability | Full Mode | Monitor Mode |
|---|---|---|
| Run Command | ✅ Yes | ❌ No (blocked) |
| Guest Configuration | ✅ Yes | ❌ No |
| Monitoring Extensions (AMA, MDE, Dependency, Qualys) | ✅ Yes | ✅ Yes |
| Other Extensions | ✅ Yes | ❌ Blocked unless allowlisted |
| Remote Management Tools | ✅ Yes | ❌ Limited |

For complete details on which extensions are considered "monitoring extensions" in monitor mode, see Microsoft's security extensions documentation.

The configuration commands are straightforward:

    # Enable full mode (default, but can be set explicitly)
    azcmagent config set config.mode full

    # Enable monitor mode
    azcmagent config set config.mode monitor

    # Verify current mode
    azcmagent config list

Even in full mode, you can block specific extensions using blocklists. For example, to block Run Command specifically while keeping other functionality:

    # Block Run Command extension (Windows)
    azcmagent config set extensions.blocklist "microsoft.cplat.core/runcommandhandlerwindows"

    # View current configuration
    azcmagent config list

You can view blocked extensions in the Azure portal by navigating to your Arc server → JSON View and checking the extensionsBlockList property.

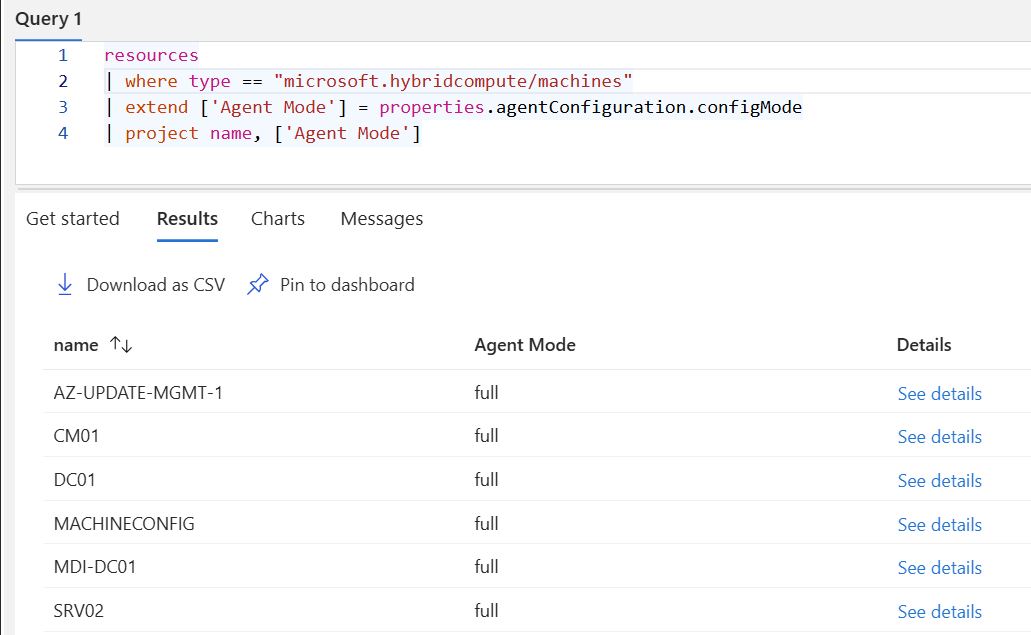

Operational Considerations and Fleet Monitoring

If you decide to block something like Run Command, make sure your team understands what you've blocked. Otherwise you might block your own daily operational needs. Blocking one method while leaving 10 other ways to achieve the same thing gives false security. People may still do things through other paths.

Figure out what you're trying to achieve operationally, then see how to implement that properly in production. Don't just randomly block features without understanding the full impact. Detailed security requirements are useless if they make your infrastructure unmanageable in daily operations.

To monitor configuration across your fleet, use these queries:

Azure Resource Graph Explorer:

    resources
    | where type == "microsoft.hybridcompute/machines"
    | extend ['Agent Mode'] = properties.agentConfiguration.configMode
    | project name, ['Agent Mode']

From Log Analytics Workspace:

    arg("").resources
    | where type == "microsoft.hybridcompute/machines"
    | extend ['Agent Mode'] = properties.agentConfiguration.configMode
    | project name, ['Agent Mode']

You can also view this in the Azure portal by navigating to your Arc server → JSON View and checking the configMode and extensionsAllowList properties.
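Once you have the query results, checking them against your intended configuration is a small step. The sketch below assumes rows shaped like the query output plus a hypothetical "tier" tag, and a made-up policy that maps each tier to an expected mode; neither is a recommendation.

```python
def find_mode_drift(servers: list[dict], expected_mode: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (server, expected mode, actual mode) for every server that deviates."""
    drift = []
    for server in servers:
        expected = expected_mode.get(server["tier"])
        actual = (server.get("mode") or "unknown").lower()
        if expected and actual != expected:
            drift.append((server["name"], expected, actual))
    return drift


# Rows shaped like the query output, plus a hypothetical "tier" tag.
servers = [
    {"name": "dc01", "tier": "tier0", "mode": "monitor"},
    {"name": "app01", "tier": "tier1", "mode": "full"},
    {"name": "dc02", "tier": "tier0", "mode": "full"},
]
policy = {"tier0": "monitor", "tier1": "full"}  # example policy, not a recommendation
drift = find_mode_drift(servers, policy)
print(drift)
```

Running a check like this on a schedule turns the security mode decision into something you can verify across the fleet rather than assume.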

The Hidden Domain Admin Problem

Before choosing security modes, understand the broader governance implications covered in our Azure Arc for Servers Implementation Guide. Arc operators in full mode can achieve domain-admin-level impact on Tier-0 systems via Run Command and extension control.

This isn't unique to Arc - many system management solutions operate in SYSTEM context to perform their functions. Configuration Manager, Defender for Endpoint, backup agents, monitoring tools, and other enterprise management platforms all require elevated privileges to do their jobs effectively.

When you have Azure Arc managing domain controllers with Run Command enabled, your Arc operators can execute scripts on Tier-0 systems with SYSTEM privileges. This ties directly into your total governance model in Azure - who has access to what resources, at what scope, with what permissions.

The governance challenge: You might have 2 official Domain Admins, but also 3 Configuration Manager operators, 4 Defender for Endpoint operators, and 2 Azure Arc operators. That's 11 people with domain admin-level operational capabilities across different management platforms.

This isn't just an Arc problem - it's a comprehensive governance model problem that spans your entire hybrid infrastructure management approach.

Making the Right Choice

Use monitor mode when you only need monitoring data and don't require remote management capabilities. Use full mode when you need to actually manage servers remotely - deploy software, run scripts, apply configurations.

The practical problem: if you lock down servers with monitor mode but then need to deploy something or fix an issue, how are you going to do that? You'll either need to switch modes or find another way to manage those servers. Choose the mode based on what management capabilities you actually need, not theoretical security preferences.

Governance Integration: Your Arc security mode decisions should align with your overall Azure governance framework - management group structure, RBAC assignments, resource organization, and access policies. Security modes are just one layer in your comprehensive access control strategy.

The Smart Security Approach

Don't just blindly disable all functionality and call it "secure." BE SMART!

The real security comes from:

- Understanding the risks - Know what each mode actually allows and blocks

- Strong identity management - Proper RBAC, conditional access, and authentication

- Operational visibility - Comprehensive logging and monitoring of who does what

- Having the right people - Trained operators who understand the security implications

Monitor mode vs full mode is just one control. The bigger security picture includes identity governance, network security, endpoint protection, and operational procedures. A locked-down Arc agent means nothing if your identity system is weak or your operators don't understand what they're managing.

Choose security modes based on operational requirements and risk tolerance, not fear of functionality.

3. Health Monitoring - Yes, It's More Than the Agent :)

Once you've decided on your security mode and operational capabilities, you need to ensure those capabilities actually work in practice. This is where health monitoring becomes crucial - not just checking if agents are connected, but validating that your chosen Arc configuration delivers the operational results you need.

Get Agent Connection Monitoring Under Control First

Your primary goal is to get agent connection monitoring under control - do at least that foundation, then build everything else on top. The key question: what's your acceptable offline window before you need to know about it?

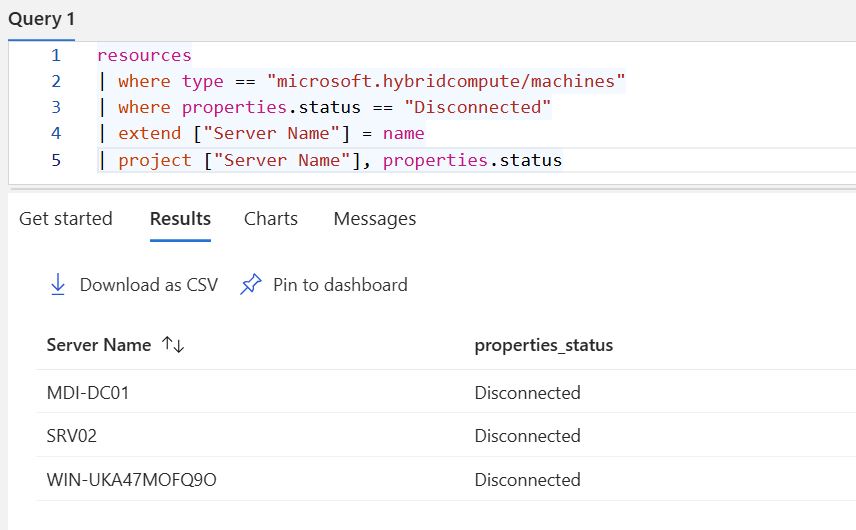

With Azure Resource Graph + Log Analytics integration (covered in detail in our Azure Arc Data Sources article), you can create Azure monitoring alerts that trigger when servers exceed your defined offline threshold:

    arg("").resources
    | where type == "microsoft.hybridcompute/machines"
    | where properties.status == "Disconnected"
    | extend Status = tostring(properties.status)
    | extend ["Server Name"] = name
    | extend ["Last Contact Date"] = todatetime(properties.lastStatusChange)
    | where ["Last Contact Date"] <= ago(15m)
    | project ["Server Name"], Status, ["Last Contact Date"]
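The same threshold logic can be sketched outside of KQL, which helps when deciding on your offline window. This minimal example uses the 15-minute threshold from the query and the 45-day expiry mentioned earlier; the bucket names are my own.

```python
from datetime import datetime, timedelta, timezone

ALERT_AFTER = timedelta(minutes=15)  # threshold used in the query above
EXPIRES_AFTER = timedelta(days=45)   # the 45-day cliff


def classify(last_contact: datetime, now: datetime) -> str:
    """Bucket a disconnected server by how long it has been offline."""
    offline = now - last_contact
    if offline >= EXPIRES_AFTER:
        return "expired"
    if offline >= ALERT_AFTER:
        return "alert"
    return "within tolerance"


now = datetime(2025, 1, 31, 12, 0, tzinfo=timezone.utc)
print(classify(now - timedelta(minutes=5), now))  # within tolerance
print(classify(now - timedelta(hours=3), now))    # alert
print(classify(now - timedelta(days=50), now))    # expired
```

In practice you would likely add more buckets between "alert" and "expired" (for example a warning a week or two before expiry), since re-onboarding is far more expensive than reconnecting.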

But that connectivity check is only 25% of total health monitoring. It doesn't tell you whether the Azure Monitor Agent accepts new data collection rule associations, whether data is actually flowing to Azure, or whether Azure machine configuration profiles are applying successfully on your machines. You're measuring basic connectivity while missing operational functionality.

Health monitoring coverage builds up in layers:

- Basic connectivity (25% coverage): Arc agent connected

- Extension health (50% coverage): extensions installed, extensions running

- Data flow validation (75% coverage): data collection rules active, expected data volumes

- Cross-system health (100% coverage): Defender XDR sync, Sentinel integration, policy compliance

What Health Monitoring Should Cover

At the end of the day, we need different criteria to measure if the underlying agent plus all services on top are functioning correctly:

Extension Health:

- Are all extensions actually installed and running (not just installation count)?

- What's the actual state of each extension?

- Are extensions stuck in permanent "Installing" state?

Just because an extension appears assigned doesn't mean it's functioning correctly. Use this query to check the actual provisioning states of extensions across your Arc fleet:

    resources
    | where type == "microsoft.hybridcompute/machines"
    | project ServerName = tostring(name)
    | join kind = inner (
        resources
        | where type == "microsoft.hybridcompute/machines/extensions"
        | project ServerName = tostring(split(id, '/')[8]), ExtensionName = name, ProvisioningState = properties.provisioningState
    ) on ServerName
    | project ServerName, ExtensionName, ProvisioningState
    | where ProvisioningState != "Succeeded"
    | sort by ServerName asc

This query identifies extensions that are assigned but not working correctly, helping you spot issues that could affect your Arc workloads.
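To turn the query output into something actionable, you can group the unhealthy extensions per server and start with the worst offenders. This is a minimal sketch that assumes the rows have been exported as dictionaries with the same column names as the query; the sample rows are made up.

```python
from collections import defaultdict


def unhealthy_by_server(rows: list[dict]) -> dict[str, list[str]]:
    """Group non-succeeded extensions per server as 'ExtensionName (State)' strings."""
    grouped = defaultdict(list)
    for row in rows:
        if row["ProvisioningState"] != "Succeeded":
            grouped[row["ServerName"]].append(
                f'{row["ExtensionName"]} ({row["ProvisioningState"]})'
            )
    return dict(grouped)


def worst_offenders(grouped: dict[str, list[str]], limit: int = 10) -> list[tuple[str, list[str]]]:
    """Servers with the most unhealthy extensions first."""
    return sorted(grouped.items(), key=lambda item: len(item[1]), reverse=True)[:limit]


# Made-up sample rows using the query's column names.
rows = [
    {"ServerName": "srv01", "ExtensionName": "AzureMonitorWindowsAgent", "ProvisioningState": "Failed"},
    {"ServerName": "srv01", "ExtensionName": "MDE.Windows", "ProvisioningState": "Updating"},
    {"ServerName": "srv02", "ExtensionName": "AzureMonitorWindowsAgent", "ProvisioningState": "Succeeded"},
    {"ServerName": "srv03", "ExtensionName": "MDE.Windows", "ProvisioningState": "Creating"},
]
grouped = unhealthy_by_server(rows)
print(worst_offenders(grouped, limit=1))
```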

Version Alignment:

- Are agent versions aligned across the fleet?

- Are extensions updated to supported versions?

- Do we have 47 different agent versions across our environment?

Operational Functions:

- Are servers scanning for updates successfully?

- Are update installations completing without errors?

- Are data collection rules actually collecting data?

- Are security events flowing from domain controllers to Sentinel?

Cross-System Data Flow:

- Is Log Analytics workspace receiving expected data volumes?

- Are Defender XDR device objects properly synchronized?

- Are policy compliance checks running and reporting correctly?

The Discovery Problem

You discover data flow issues when it's too late - like finding out you didn't get any security events from your domain controllers for the past two weeks when an incident kicks in. Without proper monitoring, you're operating blind.
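A simple way to avoid that surprise is to compare the list of servers that should be sending data with the last time each one actually did. The sketch below assumes you already have a "last event seen" timestamp per server (for example from a Log Analytics query); the server names and the one-day tolerance are illustrative.

```python
from datetime import datetime, timedelta, timezone


def silent_sources(
    last_event: dict[str, datetime],
    expected: set[str],
    now: datetime,
    max_silence: timedelta,
) -> list[str]:
    """Servers that should send data but never did, or went quiet for too long."""
    silent = []
    for server in sorted(expected):
        seen = last_event.get(server)
        if seen is None or now - seen > max_silence:
            silent.append(server)
    return silent


now = datetime(2025, 1, 31, 12, 0, tzinfo=timezone.utc)
last_event = {
    "dc01": now - timedelta(minutes=10),
    "dc02": now - timedelta(days=14),  # silent for two weeks
}
expected = {"dc01", "dc02", "dc03"}    # dc03 has never reported
print(silent_sources(last_event, expected, now, max_silence=timedelta(days=1)))
```

The important part is the `expected` set: a server that never sent anything does not show up in any "last seen" query, so the expectation has to come from somewhere else, such as Arc inventory or AD.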

During the MMA era, we experienced major West Europe region outages where Microsoft's storage infrastructure failed for days, requiring PowerShell automation to restart services across thousands of servers once recovered.

Even when you do everything correctly, external factors beyond your control will disrupt operations. Your operational procedures must account for scenarios where even Microsoft's own services fail. There's no failover to other regions - everything runs in one region and Microsoft provides no solution for that single point of failure.

Multiple Monitoring Routes

We have different tools and services to achieve the same monitoring goals using different routes:

Azure Monitor Alerts:

- Use KQL queries to define health conditions

- Attach action groups to kick off automated flows

- Built-in integration with Azure Resource Graph queries

- Templates available for common Arc monitoring scenarios

Custom Automation Routes:

- Azure Logic Apps for complex workflows

- Azure Automation runbooks for remediation actions

- Microsoft Sentinel for security-focused monitoring

- Custom PowerShell scripts for specialized checks

Azure alerts allow you to attach action groups and kick off the flows you need, but sometimes alternative routes give you more control over the monitoring and response logic.

Log Analytics Workspace Monitoring

Azure Log Analytics workspace is super important to dig out all the activities that really matter. But you need a proper monitoring plan - otherwise you discover critical data gaps when you need the data most.

Common Monitoring Blind Spots:

- Extension installation failures that never get reported

- Data collection rule associations that appear successful but collect no data

- Security agents that show "Running" but stopped sending events weeks ago

- Update management operations that fail silently

- Arc server offboarding that leaves orphaned monitoring configurations

Building a Monitoring Strategy

Make sure to have a proper monitoring plan that covers:

- Basic Connectivity - Arc agent to Azure (your 25%)

- Extension Functionality - All extensions working, not just installed

- Data Flow Validation - Expected data volumes reaching Log Analytics

- Cross-System Health - Defender XDR, Sentinel, and policy compliance

- Operational Workflows - Update management, configuration management, security monitoring

For comprehensive operational monitoring beyond these basics, our Azure Arc Operations Checklist (21 tasks: 18 weekly plus 3 monthly strategic reviews) provides a systematic approach to monitoring all aspects of your Arc infrastructure. It covers everything from basic connectivity to advanced cross-system validation and remediation workflows.

Without comprehensive monitoring, you're managing infrastructure blind until something breaks badly enough to get your attention.

4. Automation Strategies

Monitoring shows you what's happening, but do you want to manually fix every issue it reveals? Of course not. Azure offers different automation tools that can act on monitoring signals. The goal is to connect the dots - make your monitoring signals work for you and engage automation when it's needed, rather than manually responding to every alert.

Every project teaches you something, and every new project is a chance to push things forward. Different customers have different expectations, maturity levels, and levels of understanding. Besides the PowerShell module and the Re-Onboarding Assistant mentioned earlier, I've built other automation solutions - four of them are available for our premium members.

If you don't automate what you can, then you don't have time to drink mojitos on the beach! 🍹

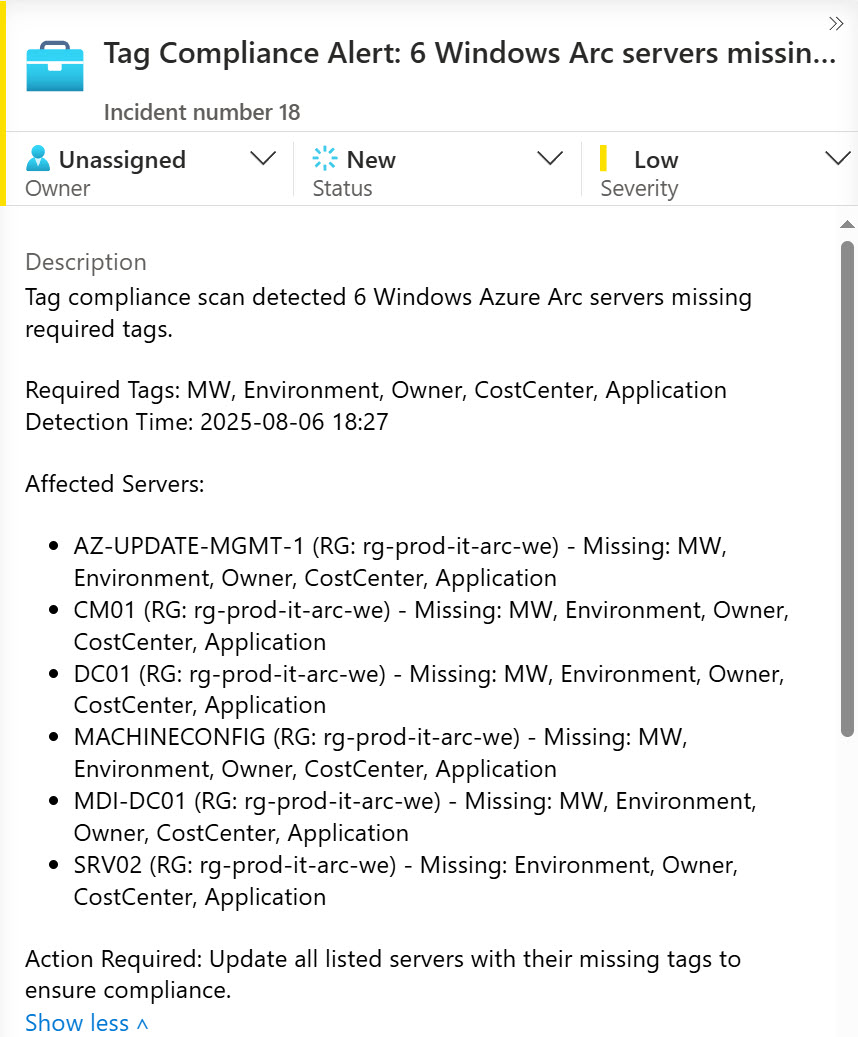

Azure Arc Tag Compliance Solution

As I've said many times and will keep saying: tags are important but sadly under-used in many cases. It takes time and training for customers to understand why tags matter and what they can do with them. Others in the Microsoft community, like John Joyner and Cameron Fuller, have given MMS presentations on different use cases.

The Azure Arc Tag Compliance solution discovers which Arc servers are missing required operational tags and creates Microsoft Sentinel incidents so your team can fix them before operations break. When Arc servers get onboarded, operational details like maintenance windows, backup schedules, or environment classifications might not be known yet - and those missing tags may never get added later.

Once you start using tags, you need to monitor compliance. When tags are missing or wrong, create an incident in Sentinel. Do you need Sentinel incidents for everything? Of course not, but this shows what's possible when you actually monitor your tag compliance.
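The core of a tag compliance check is small. The following is a minimal sketch of the idea, not the solution itself: the required tag names are hypothetical, and the step that creates a Sentinel incident is left out.

```python
# Hypothetical required tags; use whatever your operations actually depend on.
REQUIRED_TAGS = {"MaintenanceWindow", "BackupSchedule", "Environment"}


def missing_tags(servers: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Map each non-compliant server to the required tags that are absent or empty."""
    report = {}
    for name, tags in servers.items():
        present = {key for key, value in tags.items() if value and value.strip()}
        missing = sorted(REQUIRED_TAGS - present)
        if missing:
            report[name] = missing
    return report


# Made-up inventory: server name -> tags
servers = {
    "srv01": {"MaintenanceWindow": "Sat 02:00", "BackupSchedule": "daily", "Environment": "prod"},
    "srv02": {"Environment": "test", "BackupSchedule": " "},
    "srv03": {},
}
print(missing_tags(servers))
```

Treating empty or whitespace-only values as missing matters in practice, because placeholder tags created during onboarding otherwise count as compliant.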

Azure Arc MDE Integration Solution

The Azure Arc MDE Integration solution addresses a specific pain point - having the MDE extension applied isn't enough. Is it actually enrolled in Defender XDR or not? When everything works correctly, the solution writes back status tags to the Arc object. When you're already in the Azure portal, you can see the Defender XDR enrollment data directly on the Arc server.

This solution correlates Defender for Endpoint data with Arc resources, automatically tagging servers with their onboarding status, threat detection status, and compliance state.

AD Cross-Reference Automation

People spin up new VMs and claim "I did one for testing" but it's still running in production and needs to be managed. Or maybe the service principal expired and onboarding stopped working altogether.

This solution polls Active Directory daily to see which domain-joined machines are onboarded to Arc and which aren't. It queries Active Directory for all domain computers, cross-references against Arc-enabled servers, and identifies machines that should be onboarded but aren't. It also finds Arc resources that don't correspond to actual AD objects (usually decommissioned servers that weren't properly offboarded).

When machines aren't onboarded but should be, create an incident in Sentinel.
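At its core the cross-reference is a pair of set differences. This minimal sketch assumes you have already exported computer names from Active Directory and server names from Arc; matching on the lowercase short name is an assumption that may need adjusting for your naming conventions.

```python
def normalize(name: str) -> str:
    """Compare on lowercase short name so 'SRV01.corp.local' matches 'srv01'."""
    return name.split(".")[0].lower()


def cross_reference(ad_computers: set[str], arc_servers: set[str]) -> tuple[list[str], list[str]]:
    """Return (in AD but not in Arc, in Arc but not in AD)."""
    ad = {normalize(name) for name in ad_computers}
    arc = {normalize(name) for name in arc_servers}
    return sorted(ad - arc), sorted(arc - ad)


# Made-up example data
ad_computers = {"SRV01.corp.local", "SRV02.corp.local", "SRV03.corp.local"}
arc_servers = {"srv01", "srv02", "srv99"}

not_onboarded, orphaned = cross_reference(ad_computers, arc_servers)
print("Missing from Arc:", not_onboarded)  # candidates for onboarding
print("Missing from AD:", orphaned)        # candidates for offboarding cleanup
```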

Service Principal Monitoring

Based on the experience described in Stay Ahead with Azure Arc: Automate Expiry Alerts for Service Principal, monitor your Arc-related service principals. An expired service principal halts your entire Azure Arc onboarding process, preventing new server additions and disrupting your hybrid management setup.

The solution provides automated monitoring through Azure Logic Apps or Microsoft Sentinel integration to stay ahead of service principal expirations.
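The expiry check itself is simple date arithmetic. In this minimal sketch the 30-day warning window and the example dates are assumptions, and fetching the actual credential expiry dates from Microsoft Entra ID is left out.

```python
from datetime import date


def expiry_status(expires_on: date, today: date, warn_days: int = 30) -> str:
    """Classify a service principal secret by how close it is to expiring."""
    days_left = (expires_on - today).days
    if days_left < 0:
        return "expired"
    if days_left <= warn_days:
        return "expiring soon"
    return "ok"


today = date(2025, 1, 31)
print(expiry_status(date(2025, 1, 15), today))  # expired
print(expiry_status(date(2025, 2, 20), today))  # expiring soon
print(expiry_status(date(2025, 6, 30), today))  # ok
```

Pick a warning window long enough to cover your change process; if rotating a secret needs approval and a maintenance slot, a few days of notice is not enough.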

Conclusion

Azure Arc deployment is just the beginning - operational excellence determines long-term success. After managing 10,000+ Arc-enabled servers, the pattern is clear: organizations that treat Arc as "deploy and forget" end up with management problems, while those that implement proper operational procedures create reliable, scalable infrastructure.

The key operational pillars covered in this guide - lifecycle management, security modes, health monitoring, and automation strategies - aren't theoretical concepts. They're based on handling production issues at scale where small problems become significant operational challenges.

What separates successful Arc implementations:

- Clear ownership models - RACI frameworks where automation can be Responsible but humans remain Accountable

- Proactive health monitoring - Going beyond basic connectivity to validate cross-system data flow

- Automation-first mindset - Building solutions that handle the inevitable small failure rate consistently

- Security zone awareness - Understanding that Arc operators essentially become domain admins

The automation solutions referenced throughout this article - tag compliance monitoring, MDE integration validation, AD cross-reference checks, and service principal monitoring - exist because manual operational processes don't scale and aren't reliable.

Remember: Arc success isn't measured by agent count - it's measured by functional integration across your entire ecosystem. The organizations that implement systematic operational procedures outlined in this guide create infrastructure that actually works in production.

For systematic validation of your Arc operations, use the Azure Arc Operations Checklist - 18 weekly tasks plus 3 monthly strategic reviews. The complete checklist is available through email subscription below to ensure nothing falls through operational gaps.
